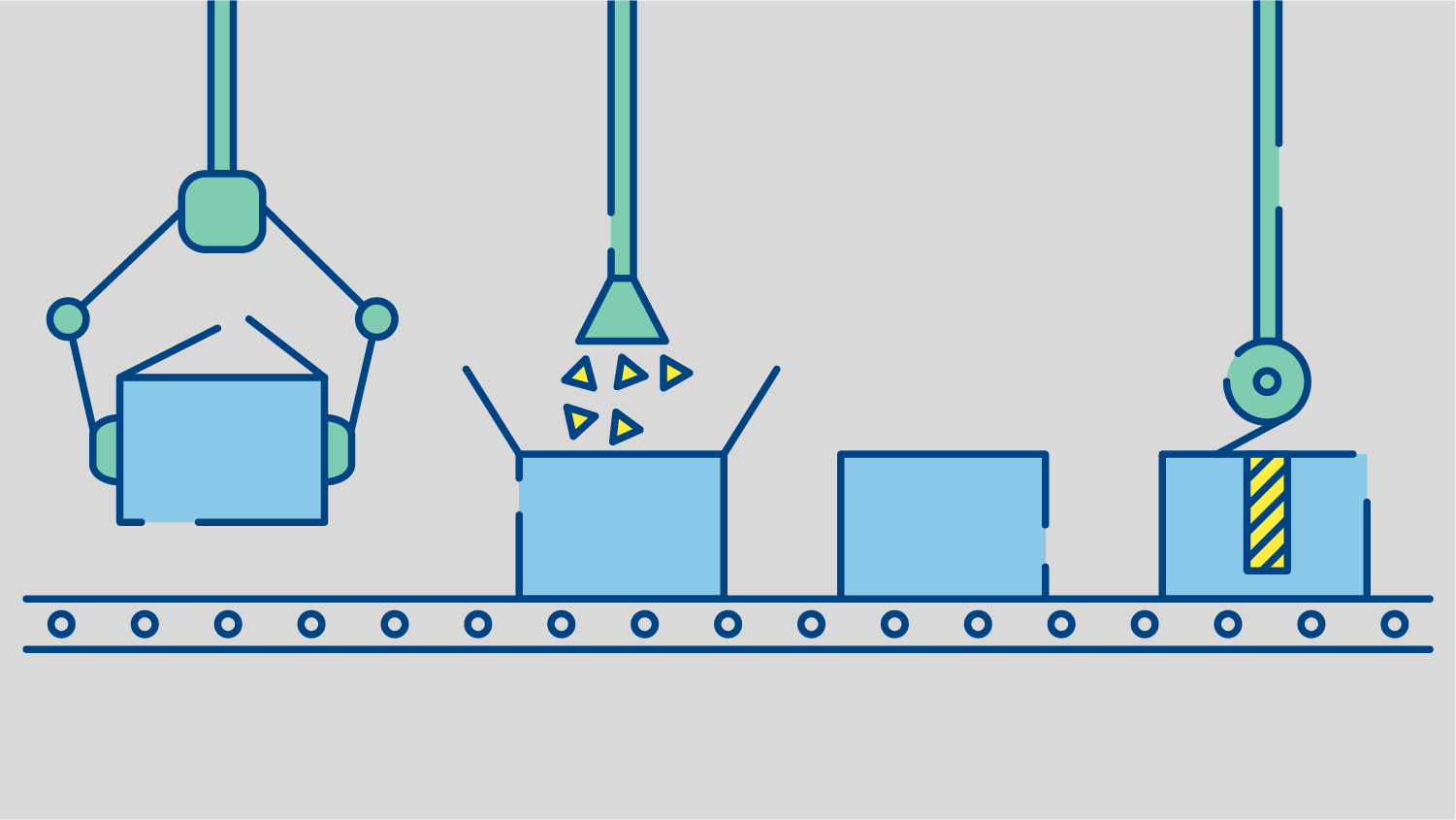

Hello from trivago's performance & monitoring team. One important part of our job is to ship more than a terabyte of logs and system metrics per day, from various data sources into elasticsearch, several time series databases and other data sinks. We do so by reading most of the data from multiple Kafka clusters and processing them with nearly 100 Logstashes. Our clusters currently consists of ~30 machines running Debian 7 with bare-metal installations of the aforementioned services. This summer we decided to migrate all of this to an on-premise [Nomad](https://www.nomadproject.io/ cluster) cluster.

Follow us on